There were similar attacks: CSS-visited-links leaking, cross domain search timing but the vector I am going to describe looks more universal and dangerous.

For sure this is not a critical vulnerability - 9 thousand years to brute force, say, /callback?code=j4JIos94nh (64**10 => 1152921504606846976 checking 25mln/min). Stealing random tokens does not seem feasible.

On the other hand the trick is WontFix. Every website owner can detect:

- if you are user of any other website - github, google, or, maybe, DirtyRussianGirls.com... ?

- if you have access to some private resources/URLs (do you follow some protected twitter account)

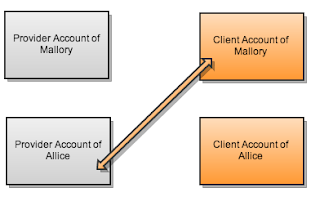

- applications you authorized on any OAuth1/2 provider

- some private bits URL can contain: your Facebook nickname or Vkontakte ID.

I wrote a simple JS function and playground - feel free to use! (Sorry playground can be buggy sometimes, timeouts for Firefox should be longer and direct_history=false)

check_redirect(base, callback, [assigner])

base, String - starting URL. Can redirect to other URL automatically or only with some conditions (user is not logged in).callback, Function - receives one Boolean argument, true when at least one of assigned URLs changed history instantly (URL was guessed properly) and false when history object wasn't updated (no URL guessed and all assignment were "reloading").

assigner, Function - optional callback, can be used for arbitary URL attempts and iterations (bruteforce). For example bruteforcing user id between 0-99:

function(w){

for(var i=0;i < 100;i++){

w.location='http://site.com/user?id='+i+'#';

}

}

By default exploit assigns location=base+'#', making sure no redirect happened at all. Checking if user is logged in on any website is super easy:

check_redirect('http://site.com/private_path',function(result){

alert(result ? 'yes' : 'no');

}).

(/private_path path is supposed to redirect user, in case he is not logged in.

Under the hood.

When you execute cross_origin_window.location=SAME_URL# and SAME_URL is equal to current location, browsers do not reload that frame. But, at the same time, they update 'history' object instantly.

This opens a timing attack on history object:

- Prepare 2 slots in history POINT 1 and POINT 2 that belong to your origin.

cross_origin_window.location="/postback.html#1"

cross_origin_window.location="/postback.html#2" - Navigate cross origin window to BASE_URL

cross_origin_window.location=base_url; - Try to assign location=TRY_URL# and instantly navigate 2 history slots back.

cross_origin_window.location=try_url+'#';

cross_origin_window.history.go(-2) - If the frame changed location to POINT 2 - your TRY_URL was equal to REAL_URL and history object was updated instantly. If it is POINT 1 - TRY_URL was different and it made browser to start page reloading, history object was not changed immediately.

Wait, only Chrome supports cross origin access to "history" property - how can you make it work on other browsers?

Thankfully I found a universal bypass - simply assign a new location POINT 3 which will contain <script>history.go(-3)</script> doing the same job.

Bruteforce in webkit:

Vkontakte (russian social network) http://m.vk.com/photos redirects to http://m.vk.com/photosUSER_ID. We can use map-reduce-technique from my previous Chrome Auditor script extraction attack.

Iterate 1-1kk, 1kk-2kk, 2kk-3kk and see which one returned to POINT 2. Repeat: 2000000-2100000, 2100000-2200000 etc.

It will take a few minutes to brute force certain user id between 1 and 1 000 000. Vkontakte has more than 100 millions of users - around 10 minutes should be enough.

Maybe performance tricks can make bruteforce loop faster (e.g. web workers). So far for(){} loop is only bottle neck here :)

Conclusion

Website owners can track customers' hobbies, facebook friends (predefined) visiting their blogs etc.I have a strong feeling we dont want such information to be disclosed. It should be fixed just like CSS :visited vulnerability was fixed a few years ago.

Please, browsers, change your mind :)

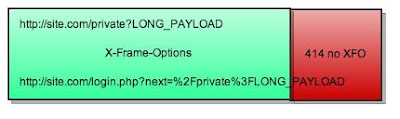

Bonus - 414 and XFO

During the research I found unrelated but interesting attack vector, based on X-Frame-Options detection (Jeremiah wrote about it) and 414 error (URI is too long).Server error pages almost never serve X-Frame-Options header and this trick can be applied for many popular websites using X-Frame-Options.

The picture below makes it clear (LONG_PAYLOAD depends on the server configuration, usually 5000-50000 symbols.):

.png)

.png)